Artificial intelligence has become a transformative force in business, improving operational efficiency and productivity.

However, as AI continues to advance, it also introduces security risks that businesses must proactively address.

As a vCISO (virtual Chief Information Security Officer) service provider, we have received many questions from clients who are concerned about the threats associated with using AI.

In response, we wrote this article to explain the security risks of AI in business and to offer practical recommendations for mitigating them.

Our insights draw on the guidelines and best practices we have developed for our clients.

Let's start by exploring some real-life risks and examples that can occur when using AI in the workplace.

It's important to be aware of these situations, so you can take the necessary precautions to avoid them:

Imagine unintentionally uploading confidential company information to an AI tool.

For instance, a lawyer might mistakenly share an unredacted contract, or a project manager might accidentally upload verbatim meeting minutes instead of a summarized version.

According to one recent analysis, 4 percent of companies using AI had inadvertently submitted confidential company data at some point.

These incidents highlight the various security risks associated with AI usage.

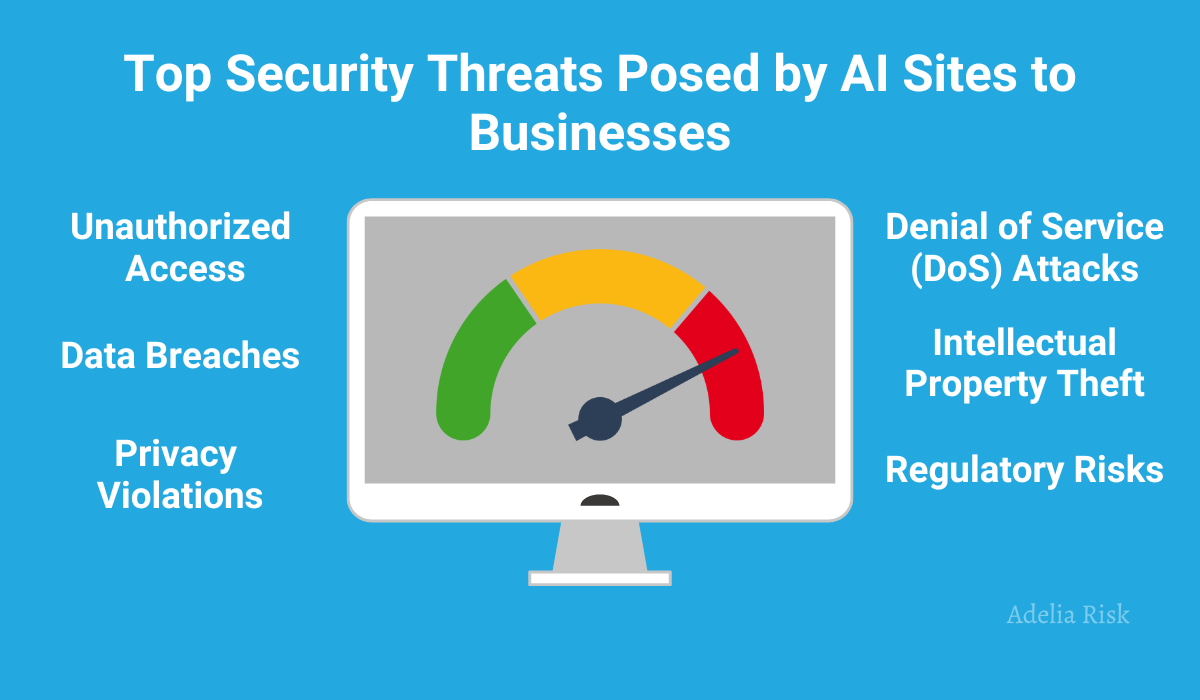

These risks include data breaches, privacy violations, intellectual property exposure, and ethical concerns.

To prevent such mishaps, establish clear guidelines for data handling and teach employees to review and redact information before submitting it to AI tools.

Sometimes, even well-intentioned employees can unknowingly upload sensitive data to AI systems.

For example, a medical billing assistant might mistakenly upload a spreadsheet of patient data while trying to automate analysis.

This can lead to privacy breaches, compromise the confidentiality of personal information, and violate regulatory requirements such as HIPAA (Health Insurance Portability and Accountability Act).

To avoid such situations, organizations should enforce strict data access controls, provide comprehensive training on handling sensitive information, and establish clear rules regarding data submission to AI tools.

Uploading source code without proper review can expose your intellectual property and create security vulnerabilities.

Shared code can reveal vulnerabilities, hard-coded credentials, or details of your internal systems that malicious actors could exploit, leading to security breaches, data leaks, or unauthorized system access.

Even large companies have faced this issue: in 2023, Samsung employees reportedly pasted internal source code into ChatGPT.

To safeguard your code, implement robust code review processes, restrict access to critical repositories, and require approval before any sensitive source code is uploaded to external platforms.

With the rise of AI, many new apps and sites have emerged. However, not all of them are trustworthy.

Some might be deliberately malicious or poorly run, posing security threats.

For example, certain websites might intentionally deceive users, aiming to collect personal or sensitive information for illicit purposes, such as identity theft or fraud.

These sites may also employ deceptive tactics, misleading users into sharing confidential data or installing malicious software on their devices.

To protect your organization, educate employees about the dangers of unfamiliar AI sites and encourage the use of trusted, reputable platforms.

Even reputable AI vendors can experience data breaches.

In March 2023, for example, ChatGPT suffered a data breach caused by a bug in an open-source library it relied on.

The incident exposed the titles of some users' chat histories, along with subscribers' personal information, including names, email addresses, payment addresses, credit card types, and the last four digits of payment card numbers.

To safeguard your data, it is crucial to exercise due diligence when selecting AI vendors.

Regularly review their security practices and independent audit reports to confirm that they have robust measures in place to protect your information.

As Microsoft and Google incorporate AI into email and productivity tools, there's a higher risk of accidental data uploads.

To avoid unintended exposure, educate your employees about these risks and stress the importance of reviewing content before sharing it through AI-powered tools.

Implementing thorough review processes and user awareness programs can help mitigate the potential for data leaks.

A growing number of third-party AI tools promise higher productivity in exchange for access to employees' email and documents, and granting those permissions requires careful control and vigilance.

Without proper oversight, employees may unintentionally grant full access to their sensitive information and accounts.

This can lead to unauthorized access, data breaches, and potential exposure of confidential or proprietary information.

To mitigate this risk, establish clear rules on permissible AI services, enforce data access limitations, and carefully review permissions before granting access to third-party tools.
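Part of this permission review can be automated. The sketch below assumes you can export, from your identity provider's admin console, a list of third-party apps together with the OAuth scopes each one requested and whether it is on your approved list. The export format, the `AppGrant` type, and the app names are hypothetical; the scope strings are real Google and Microsoft Graph scopes, but the "high-risk" list is only an illustrative starting point.

```python
from dataclasses import dataclass

# Example scopes that grant broad access to mail or files. Illustrative,
# not a complete risk classification.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/",               # Google: full Gmail access
    "https://www.googleapis.com/auth/drive",  # Google: full Drive access
    "Mail.ReadWrite",                         # Microsoft Graph: read/write mail
    "Files.ReadWrite.All",                    # Microsoft Graph: all files the user can access
}


@dataclass
class AppGrant:
    """One third-party app and the scopes it requested (hypothetical export format)."""
    app_name: str
    scopes: list[str]
    approved: bool  # True if the app is on your approved-tools list


def flag_risky_grants(grants: list[AppGrant]) -> list[tuple[str, list[str]]]:
    """Return (app_name, risky_scopes) for unapproved apps requesting high-risk scopes."""
    flagged = []
    for grant in grants:
        risky = sorted(s for s in grant.scopes if s in HIGH_RISK_SCOPES)
        if risky and not grant.approved:
            flagged.append((grant.app_name, risky))
    return flagged


grants = [
    AppGrant("ExampleSummarizer", ["https://mail.google.com/", "openid"], approved=False),
    AppGrant("ApprovedNotes", ["Files.ReadWrite.All"], approved=True),
    AppGrant("CalendarHelper", ["openid", "email"], approved=False),
]
for name, scopes in flag_risky_grants(grants):
    print(f"Review {name}: requests {', '.join(scopes)}")
```

Only unapproved apps asking for broad scopes are surfaced, so reviewers can focus on the grants most likely to expose sensitive mail or files.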

Without proper safeguards, you can put your business at risk of non-compliance with various regulations, such as HIPAA, GDPR, PIPEDA, CCPA, and others.

The consequences can include substantial financial penalties, legal liabilities, damage to reputation, loss of customer trust, and potential business disruptions.

Stay informed about the regulatory landscape and implement measures to ensure data privacy and security.

This includes data anonymization, access controls, and continuous monitoring to detect and rectify any compliance gaps.
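One common building block for this kind of anonymization is replacing direct identifiers with keyed hashes (HMAC-SHA256) before records leave your environment, so the same person always maps to the same token and analysis still works. Strictly speaking this is pseudonymization, not anonymization: anyone holding the key can link tokens back to known identifiers, and regulations such as GDPR still treat pseudonymized data as personal data. The key, field names, and token format below are assumptions for illustration.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice load it from a secrets manager,
# never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Fields treated as direct identifiers in this example (an assumption).
IDENTIFIER_FIELDS = {"name", "email", "patient_id"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable, keyed token for an identifier (truncated HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def pseudonymize_record(record: dict[str, str]) -> dict[str, str]:
    """Replace identifier fields with tokens; leave all other fields unchanged."""
    return {
        field: pseudonymize(value) if field in IDENTIFIER_FIELDS else value
        for field, value in record.items()
    }


record = {"name": "Jane Doe", "email": "jane@example.com", "claim_amount": "120.00"}
print(pseudonymize_record(record))
```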

AI-generated content may inadvertently violate copyright law or open-source license terms.

Additionally, AI-generated content might not qualify for copyright or patent protections.

To prevent legal issues, establish clear guidelines on the use of AI-generated content, require human validation of results, and educate employees about the legal limitations of AI-generated material.

AI tools are not infallible and can produce errors or "hallucinations."

Employees may unknowingly rely on incorrect data generated by these systems, assuming it to be accurate.

This can pose significant risks to businesses, as decisions and actions based on erroneous or misleading information can have detrimental consequences.

For instance, in industries handling sensitive data like healthcare or finance, relying on AI-generated insights may result in misinformed decision-making, leading to regulatory violations or breaches of confidential information.

Encourage critical thinking, validate results with human review, and provide training on the potential errors and limitations of these tools.

When using AI tools to generate source code, it's crucial to be cautious and proactive in managing potential risks.

This code can introduce bugs or security vulnerabilities if not carefully reviewed and addressed.

Therefore, it is essential to establish comprehensive code review processes, conduct thorough testing, and actively involve human developers in the evaluation and refinement of the generated code.

By taking these measures, businesses can protect the integrity, reliability, and security of their codebase while still benefiting from AI in software development.

Now that we understand the potential risks, let's explore some practical recommendations to help you keep safe while using artificial intelligence in the workplace:

The safest approach is to use the AI features built into established platforms such as Microsoft 365 and Google Workspace, or those now being offered by cloud vendors such as Microsoft Azure and Amazon Web Services.

These platforms prioritize security and privacy, providing you with enhanced controls to safeguard your data.

By using these trusted platforms, you keep AI usage within a secure, controlled environment.

To maintain a secure environment, establish clear rules and approval processes for the use of AI services.

Determine which tools are allowed and define an approval process for adding new tools or services.

This ensures that every tool in use aligns with your organization's security and compliance requirements.

Implement a clear policy that mandates the anonymization and redaction of any data submitted to AI tools.

Emphasize the removal of any references to your company, other organizations, individuals, and sensitive information.

While enforcing this policy may be challenging, it's critical to stress the importance of data privacy and anonymization to minimize the risk of unintended data exposure.
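Part of this redaction can be automated as a filter that runs before text is submitted to an AI tool. The sketch below uses regular expressions to mask email addresses, US-style phone numbers, and Social Security numbers, plus a hypothetical list of internal names (`SENSITIVE_TERMS`). Regex redaction misses many cases, so treat it as a safety net rather than a replacement for human review.

```python
import re

# Patterns for common identifiers (illustrative, not exhaustive).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

# Hypothetical names of your company, clients, and projects to strip.
SENSITIVE_TERMS = ["Acme Corp", "Project Falcon"]


def redact(text: str) -> str:
    """Mask known identifier patterns and sensitive terms before text leaves the company."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    for term in SENSITIVE_TERMS:
        # Case-insensitive literal match on each sensitive term.
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


print(redact("Email jane.doe@acme.com or call 555-123-4567 about Project Falcon."))
# prints: Email [EMAIL] or call [PHONE] about [REDACTED].
```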

Establish clear rules that hold employees responsible for any AI-generated errors in their work.

Encourage employees to validate and review outputs, taking an active role in assessing the accuracy and validity of the information.

By promoting employee responsibility, you create a culture of accountability and reduce sole reliance on AI-generated results.

To improve monitoring and control, discourage employees from buying their own AI subscriptions for work.

Instead, procure subscriptions centrally through your company.

This centralized approach allows for effective monitoring of proper usage, ensuring compliance with security policies and minimizing the risk of unauthorized access or data breaches.

Set clear penalties or sanctions for policy violations related to using artificial intelligence.

Make it evident that breaching usage policies will be treated seriously.

By establishing consequences for non-compliance, you create a culture of responsibility and adherence to guidelines.

Assign someone within your organization to review all new AI features in applications.

Ensure that these features are either disabled or configured correctly to align with your organization's security and privacy requirements.

Regularly reviewing and configuring features reduces the likelihood of unintended data exposure and ensures that tools operate within your established security framework.

Implement monitoring to detect unapproved subscriptions, such as reviewing new payments in accounts payable and monitoring DNS queries and web proxy logs for traffic to unauthorized AI services.

These monitoring practices help identify any unauthorized tool adoptions and prevent potential security risks associated with unapproved usage.
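As an illustration of the DNS monitoring described above, the sketch below scans a DNS query log for AI-service domains that are not on your approved list and reports which client IPs queried them. The log format (space-separated timestamp, client IP, queried domain) and both domain lists are assumptions; adapt the parsing to whatever your resolver or proxy actually exports.

```python
from collections import defaultdict

# Hypothetical watch list of AI-service domains and the subset you have approved.
AI_SERVICE_DOMAINS = {"chat.openai.com", "claude.ai", "gemini.google.com", "example-ai-tool.io"}
APPROVED_DOMAINS = {"gemini.google.com"}


def matches(domain: str, watched: str) -> bool:
    """True if domain equals the watched domain or is a subdomain of it."""
    return domain == watched or domain.endswith("." + watched)


def find_unapproved(log_lines: list[str]) -> dict[str, set[str]]:
    """Map each unapproved AI domain to the set of client IPs that queried it.

    Assumes each line looks like 'timestamp client_ip domain' (an assumed format).
    """
    hits: dict[str, set[str]] = defaultdict(set)
    unapproved = AI_SERVICE_DOMAINS - APPROVED_DOMAINS
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        client_ip, domain = parts[1], parts[2].lower().rstrip(".")
        for watched in unapproved:
            if matches(domain, watched):
                hits[watched].add(client_ip)
    return dict(hits)


log = [
    "2024-05-01T09:00:01 10.0.0.12 chat.openai.com.",
    "2024-05-01T09:00:05 10.0.0.15 gemini.google.com",
    "2024-05-01T09:01:10 10.0.0.12 api.example-ai-tool.io",
]
print(find_unapproved(log))
```

Matching on subdomains (rather than exact names) catches API endpoints such as `api.example-ai-tool.io`, while the explicit `"." + watched` suffix avoids false matches on look-alike domains.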

Lock down cloud services, such as Microsoft 365 and Google Workspace, so that security administrators must approve any new third-party integrations.

This control prevents unauthorized access and ensures that third-party tools meet your organization's security standards.

By carefully managing integrations, you reduce the risk of data breaches and maintain control over your environment.

Treat artificial intelligence like any other tool that processes or stores sensitive data.

Conduct legal reviews and privacy and security assessments, such as reviewing a vendor's SOC 2 report, to confirm that these tools meet the necessary privacy and security standards.

By subjecting them to rigorous assessments, you mitigate potential risks and ensure compliance with data protection regulations.

Consider implementing Data Loss Prevention (DLP) tools that can detect and prevent the unauthorized sharing of sensitive data, such as protected health information (PHI) or personally identifiable information (PII).

While this advanced use of DLP is typically suited to mid-sized and larger companies, it can add a valuable layer of protection against unintended data leaks.
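To show the kind of check a DLP tool performs, the sketch below flags candidate payment card numbers in outgoing text and uses the Luhn checksum to filter out random digit strings. Commercial DLP products combine many detectors, context rules, and enforcement actions; this is a simplified illustration of a single detector, not how any particular product works.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings that look like payment card numbers and pass Luhn."""
    found = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            found.append(digits)
    return found


sample = "Customer card 4111 1111 1111 1111, order 1234 5678 9012 3456."
print(find_card_numbers(sample))
# prints: ['4111111111111111']
```

The order number has the right length but fails the checksum, which is why the Luhn step matters for keeping false positives down.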

Software companies use tools that scan open-source repositories for leaked source code and credentials.

While these tools are still evolving to cover AI systems, they provide an additional layer of protection for your codebase.

By detecting and addressing leaked code and credentials, you can mitigate potential security vulnerabilities.
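A basic version of the same check can run before code is pasted into an AI tool or pushed to a repository. The sketch below looks for two well-known credential formats: AWS access key IDs (`AKIA` followed by 16 uppercase letters or digits) and PEM private-key headers. Dedicated secret scanners cover far more patterns and use additional heuristics; the function name and pattern list here are illustrative.

```python
import re

# Two well-known credential formats (illustrative, far from exhaustive).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for each line that appears to contain a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


# The key below is the example value from AWS documentation, not a real credential.
snippet = """import boto3
client = boto3.client("s3", aws_access_key_id="AKIAIOSFODNN7EXAMPLE")
"""
print(scan_for_secrets(snippet))
```

A non-empty result is a signal to stop, remove the secret, and rotate it if it was ever shared.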

When planning to implement or integrate AI into your organization, it's advisable to seek legal advice to address any privacy-related concerns.

An attorney can provide guidance on compliance with data protection regulations and ensure that your organization's initiatives align with legal requirements.

Finally, it's crucial to stay informed about the latest developments in AI technology, cybersecurity threats, and regulatory changes.

Regularly review and update your AI policies and security measures to align with evolving best practices and ensure ongoing compliance and data protection.

Embracing the power of artificial intelligence in the workplace offers tremendous benefits, but it's essential to navigate the associated risks with caution.

By implementing the practical recommendations in this article, you can create a secure workplace environment, protect your sensitive data, and ensure compliance with relevant regulations.

Stay proactive, stay informed, and enjoy the advantages of AI while safeguarding your business from cyber threats.